Blog

GPT Chess

Preface

There's lots of people saying lots of things about LLMs, and I'm underqualified to give very much input on the subject. My primary interest is to attempt to understand the extent to which I interpret LLMs to be "reasoning". I just want to emphasize that this is a very very unscientific heuristic, I'm mostly interested in how well the models play, and how they explain their "thought" process. Now you can read me ramble over here, but the games are proably slightly more fun, I made a little study with the ones I played: GPT Chess Study.

I will say, whether or not I happen to think of LLMs as "reasoning" isn't very important to anything, and it doesn't make any claim about whether or not they will be productive tools or a complete waste of time or any of that. I'm just trying to think for myself about whether or not I think LLMs are reasoning. I think of most machine learning kinda like those vacuum moulding they do for the weather-proof floor mats for cars. They have a metal mould that is firm and the precise shape they want, they get the rubber-material hot, and then rapidly suck all the air out, so the rubber collapses around the mould. The whole reason we use machine learning is when we have lots of input and output data for a process that we don't fully understand how to automate directly. The training phase is sucking all the air out, and letting the system collapse around whatever function relates that input data to the output data. In my interpretation, the big question is, "is human-level reasoning necessary?" Is it firm enough in that function for the LLMs to collapse around it, without crushing it flat. Can you capture "reasoning", or are we destined to capture something else, like the terrain of the language. I tend to think it's something else, but maybe, one day it'll be undeniable.

Why?

Mostly, I think chess, at least from the perspective of an LLM, is much less of a "search" use case. So far I've been most impressed by LLM's in situations where they are directly competing with search engines or knowledge resources. They seem to do a great job turning these very long "prompts" (which I think of as queries, in this context) and produce at least plausible answers. This makes them pretty good at generating boilerplate code, or examples of solutions. However, I'm something of a skeptic when it comes to the "reasoning" claims. I think sufficiently complex "search" results can seem indistinguishable from "reasoning", and I think chess makes you reason. Despite the billions of games that have been played, (which is about as many as the large models have trained on), we still create a whole new game *almost* every time we play.

Now, what I really expect, is that once a machine can "reason" at human level, it will be able to play chess very well, and it will be able to explain its own thoughts accurately. In some respects, the strength is less interesting to me, plenty of smart people would miscalculate if thrown into a chess game, but they can tell me why they thought a particular move would work, and we could talk about what they missed.

Process

Last time I did this, I just bought the ChatGPT subscription and told it moves and made them over a board. However, some nice people have made some cool resources that make my life a bit easier now. I've been using: https://dubesor.de/chess/, which is a little website that lets me plug in an API key, and just play the game. This does come with a little drawback, in that I don't know exactly how the model is prompted, I just see moves and "reasoning". For example:

Black Move 1: d5 (gpt-5-mini) Reasoning: White has

played 1.d4, so contesting the center immediately is principled.

1...d5 mirrors White's pawn move, fights for the center, and

allows natural development of Black's pieces (e.g. ...Nf6, ...c6

or ...e6 later). It leads to solid, symmetrical play and avoids

unnecessary weakening moves. Therefore 1...d5 is the most

straightforward and reliable response.

This was GPT-5-mini's explanation for it's first move. Get used to the word "principled", if you play these models in chess. They seem to have clocked (correctly) that the word "principled" occurs dramatically more often around chess than it does in most other contexts.

"Results"

High level, I think they are getting a lot better at chess, the actual playing strength of GPT-5 was relatively impressive (full model not nano/mini). The website has a leaderboard which suggests that the codex variant is quite a bit stronger, but I couldn't get it to play nice on the website. I will say, I wouldn't put much stock in the actual ELO ratings, they used Stockfish to measure the accuracy, and estimate ELO that way, and adjusted them by playing each other. I'm not sure they have enough games against a large enough pool for those ELOs to line up (and then there's the problem of which ELO they'd best compare to Chess.com/Lichess/USCF/FIDE all that). But they should give a good idea about relative strength and a nice range, GPT-5-codex appears to be an intermediate level player (not unlike myself), and that's about the peak for LLMs at the moment.

That's not a criticism by the way, it's just very expensive to get these models to play meaningful numbers of chess games.

GPT-5 played like 15 pretty reasonable moves, but I was more or less completely winning, and it did stop being able to make moves after that point. I think the app does like 3 retries by default and I retried manually about 10 times after that, and just never got moves back out of it.

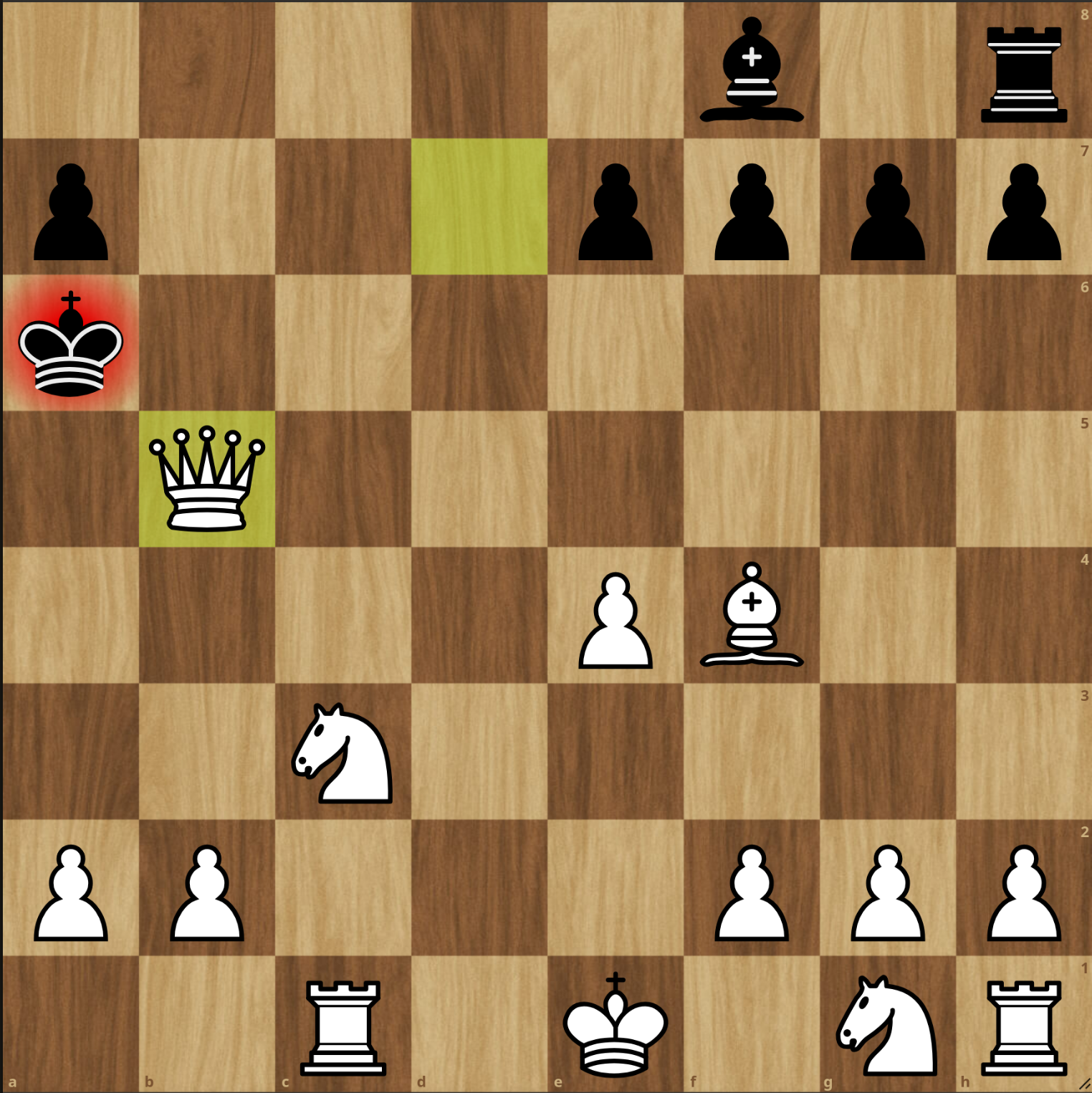

Impressive, but the real reason I remain skeptical of LLM "reasoning", is that the justification is regularly just pretty wildly wrong. Not just because it misses tactics, humans do that too, but more that it just says things that don't relate to the game. I'll give one example, we were playing a Queen's Gambit, and GPT-5 decided to accept the gambit on move 4, putting us in this position:

It gave the explanation for dxc4 that it was "freeing up it's light-squared Bishop", but the Bishop was already freed, and dxc4 wouldn't have freed it, if it hadn't been already. It's just a complete disconnect between playing like a 1500 or whatever, and not being able to explain the reasoning. I just don't think this is very analogous to human reasoning, it's more like splating out a bunch of text that biases the result, but it's just so much looser than "real" reasoning.

Anyway, I played full blown GPT-5, GPT-5-mini and o4-mini, mostly because the full-blown game took like 2 hours, so the smaller models are just much more practical to play against. This dumb hueristic hasn't yet convinced me that LLMs are really reasoning, and if they can't build a complete "mental model" of chess, I'm pretty skeptical they have built a full "world model" either.

GPT-5 Final Position

o4-mini Final Position

GPT-5-mini Final Position